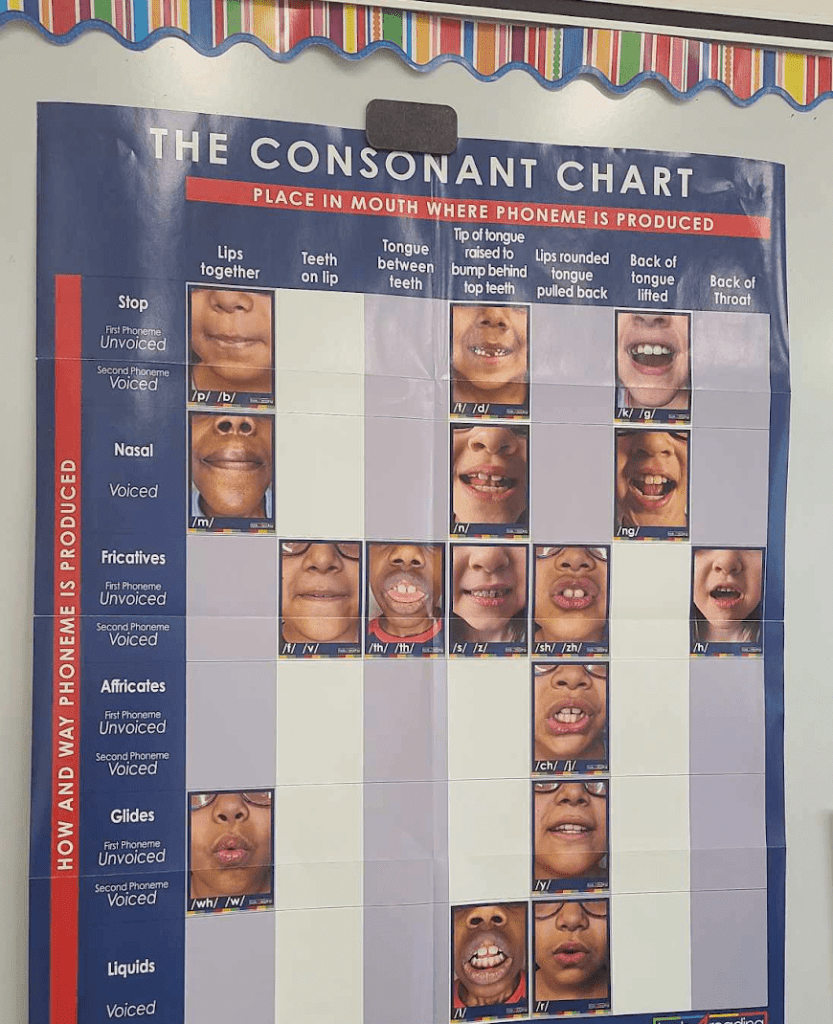

If you’re someone who’s interested in early literacy instruction, there is an excellent chance that you’ve heard of sound walls with articulatory gestures, a current hot topic in literacy. On this type of sound wall, speech sounds are laid out according to how they are produced. Consonants are arranged by place of articulation, manner of articulation, and voicing. For example, the sound /b/ would be classified as a sound made with the lips, with airflow completely stopped, and with the voice turned on. Right next to it would be /p/, which is the same as /b/ except that the voice is turned off. The approximately 18 vowels are laid out in a valley shape meant to represent tongue/jaw height and tongue backness. The speech sounds (or phonemes) are made visible with pictures of mouths producing each sound. The phonemes are linked with the letters (or graphemes) that commonly represent them, and a keyword picture is often featured as well. Students are encouraged to reflect on their own speech production. They are taught the articulatory features of each phoneme and how to locate phonemes on the sound wall so that they can link them to the corresponding graphemes. Having children attend to their production of speech sounds is proposed to enhance their learning of letter-sound correspondences, improve their phonemic awareness (the understanding that spoken words can be abstractly broken into phonemes, or speech sounds), and support their learning of decoding and spelling.

In my work settings and on social media, many people ask about the efficacy of such an approach. As an SLP specializing in literacy and a university lecturer on reading disabilities and child speech disorders, I find that this topic sits at pretty much the perfect intersection of my interests. I have dug into it over the past few years and now aim to share some of the theory and research evidence, along with my own reflections on this trend.

In this three-part blog series, we will first focus on the theoretical rationale for this approach. Part two will dive into the research evidence, and part three will be my take on how we might proceed, based on the theory and evidence.

You might be wondering about the origins of this idea. Why should literacy teachers pay attention to speech production? For some answers, we need to dive into history. In the 1950s, psychologist Alvin Liberman and colleagues at Haskins Laboratories endeavoured to build a reading machine, an early attempt at the text-to-speech technology that we have today. In doing so, they discovered that creating artificial speech wasn’t as straightforward as lining up discrete phonemes one after another. There are no tidy boundaries between phonemes; rather, speech sounds overlap in time, a phenomenon known as coarticulation. In fact, when researchers tried to string together individual consonant and vowel sounds, the result was largely unintelligible. Because of this overlap, the acoustic cues for each phoneme vary widely depending on the context; for example, the /d/ in “deep” is acoustically different from the /d/ in “do” (Liberman et al., 1957). Our words are actually something of a blur of sound rather than a sequence of discrete acoustic building blocks. As a person who is literate in an alphabetic orthography, you might find this hard to believe, because you can easily imagine words being broken into sounds (of course you know that “cat” is /k + æ + t/). But to young children and to people who have not learned to read in an alphabetic orthography (e.g., readers of the Japanese syllabary system), it is really not obvious that words can be broken down into abstract units called phonemes. “Cat” is just “cat” and not easily abstracted into /k + æ + t/.

Because the acoustic cues for any given phoneme vary depending on its context, Liberman theorized that there must be some other, more constant cue that allows listeners to distinguish phonemes. This led to the motor theory of speech perception, which holds that the objects of our perception are the articulatory gestures of the speaker rather than the acoustic signal itself. That is, the listener identifies a spoken [p] as the phoneme /p/ not because of its acoustic properties, but because they can recover the speaker’s articulatory gesture: the lips closing to make a voiceless stop. Since Liberman first proposed the motor theory, researchers have put forward several variations, but the gist of gesturalist theories remains that we perceive speech largely via the motor system. Unsurprisingly, proponents of teaching articulatory gestures in early literacy often point to Liberman’s motor theory as theoretical support for the approach.

However, speech perception is the subject of considerable debate, and many researchers argue for an account that opposes motor theory, holding instead that speech perception is driven primarily by auditory processes, despite the wide variation in acoustic cues that Liberman first identified (Diehl et al., 2004; Redford & Baese-Berk, 2023). The motor theory, in its original form, predicts that in order to perceive human speech, one must have a human vocal apparatus. But it turns out that categorical perception of human speech sounds (e.g., /p/ vs. /b/) has been demonstrated in several groups that cannot produce speech or whose speech production is severely impaired, including people with Broca’s aphasia (Hickok et al., 2011), very young babies (e.g., Eimas, 1975), and chinchillas (Kuhl & Miller, 1975). Though these findings are inconsistent with the original motor theory, they of course do not rule out involvement of the motor system in speech perception. Indeed, there is evidence that the motor system plays a role in speech perception, which we will now discuss.

In a fascinating study, researchers at the University of British Columbia (UBC) found that infants’ discrimination of dentalized [d̪] versus retroflex [ɖ] was impaired when they had a teether in their mouths that blocked tongue-tip movement, but not when they had a teether that did not (Bruderer et al., 2015). Further, the well-known McGurk effect (McGurk & MacDonald, 1976), in which seeing a speaker articulate one syllable (e.g., “ga”) while hearing another (e.g., “ba”) can lead listeners to perceive a third (e.g., “da”), is taken as support for motor involvement, or at least as evidence against a purely auditory account. We can also look to evidence from brain imaging studies: for example, Fridriksson and colleagues found that a common neural network was involved both in producing speech and in passively listening to speech (Fridriksson et al., 2009). More recently, Stokes and colleagues have suggested that the motor system facilitates perception in difficult listening conditions (e.g., a noisy party), but that its contribution to speech perception is generally modest (Stokes et al., 2019). Finally, a 2017 systematic review by Skipper and colleagues argued that asking whether the motor system plays any role in speech perception is far too simplistic a question, and that the existence of such a role should be uncontroversial. Rather, given the complexity of both perception and production, we should seek to better understand which brain regions and networks contribute to perception, and under what circumstances (Skipper et al., 2017). Long story short, there is a lot left to learn about speech perception, but it is safe to say that the original motor theory is no longer considered viable.

Are we getting lost in the theoretical weeds here? Maybe. But it seems to me that the nature and extent of motor involvement in speech perception matter to the discussion of teaching articulatory gestures in the context of literacy instruction. I would submit that appeals to the motor theory are best taken with a grain of salt. In any case, practices must be based on more than theory. Next time, we’ll look at the experimental evidence on the effectiveness of teaching articulatory gestures in the context of literacy instruction.

References:

Bruderer, A. G., Danielson, D. K., Kandhadai, P., & Werker, J. F. (2015). Sensorimotor influences on speech perception in infancy. Proceedings of the National Academy of Sciences, 112(44), 13531–13536. https://doi.org/10.1073/pnas.1508631112

Diehl, R. L., Lotto, A. J., & Holt, L. L. (2004). Speech perception. Annual Review of Psychology, 55(1), 149–179. https://doi.org/10.1146/annurev.psych.55.090902.142028

Eimas, P. D. (1975). Auditory and phonetic coding of the cues for speech: Discrimination of the [r-l] distinction by young infants. Perception & Psychophysics, 18(5), 341–347. https://doi.org/10.3758/BF03211210

Fridriksson, J., Moser, D., Ryalls, J., Bonilha, L., Rorden, C., & Baylis, G. (2009). Modulation of frontal lobe speech areas associated with the production and perception of speech movements. Journal of Speech, Language, and Hearing Research, 52(3), 812–819. https://doi.org/10.1044/1092-4388(2008/06-0197)

Hickok, G., Costanzo, M., Capasso, R., & Miceli, G. (2011). The role of Broca’s area in speech perception: Evidence from aphasia revisited. Brain and Language, 119(3), 214–220. https://doi.org/10.1016/j.bandl.2011.08.001

Kuhl, P. K., & Miller, J. D. (1975). Speech perception by the chinchilla: Voiced-voiceless distinction in alveolar plosive consonants. Science, 190(4209), 69–72. https://doi.org/10.1126/science.1166301

Liberman, A. M., Cooper, F. S., Shankweiler, D. P., & Studdert-Kennedy, M. (1967). Perception of the speech code. Psychological Review, 74(6), 431–461. https://doi.org/10.1037/h0020279

Liberman, A. M., Harris, K. S., Hoffman, H. S., & Griffith, B. C. (1957). The discrimination of speech sounds within and across phoneme boundaries. Journal of Experimental Psychology, 54(5), 358–368. https://doi.org/10.1037/h0044417

Redford, M., & Baese-Berk, M. (2023). Acoustic theories of speech perception. In Oxford Research Encyclopedia of Linguistics. Oxford University Press. https://doi.org/10.1093/acrefore/9780199384655.013.742

Skipper, J. I., Devlin, J. T., & Lametti, D. R. (2017). The hearing ear is always found close to the speaking tongue: Review of the role of the motor system in speech perception. Brain and Language, 164, 77–105. https://doi.org/10.1016/j.bandl.2016.10.004

Stokes, R. C., Venezia, J. H., & Hickok, G. (2019). The motor system’s [modest] contribution to speech perception. Psychonomic Bulletin & Review, 26(4), 1354–1366. https://doi.org/10.3758/s13423-019-01580-2

Whalen, D. H. (2019). The motor theory of speech perception. In Oxford Research Encyclopedia of Linguistics. Oxford University Press. https://doi.org/10.1093/acrefore/9780199384655.013.404
